What Changes on August 2, 2025 Under the EU AI Act — And What It Means for Your Business

The EU Artificial Intelligence Act (“AI Act”) formally entered into force on 1 August 2024, but its compliance obligations are being phased in over several years. The next major enforcement wave comes on 2 August 2025, with crucial implications for AI developers, providers, and users across the EU and beyond.

What happens on 2 August 2025?

On this date, several key obligations under the AI Act will become enforceable:

1. Governance Rules for General-Purpose AI Models (GPAI)

Providers of GPAI models must now comply with baseline transparency and copyright obligations. Providers of models that pose systemic risk face additional safety and risk-management duties. These obligations include:

Technical documentation: Detailing model training, capabilities, and limitations.

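Copyright compliance: Putting in place a policy to comply with EU copyright law, including rights holders’ opt-outs from text and data mining, and publishing a summary of the content used for training.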

Risk assessments and mitigation: For GPAI models with systemic risk, identifying and reducing foreseeable risks, including bias or misuse.

Information-sharing obligations: Providing documentation to downstream providers that integrate the model into their own AI systems.

2. National Oversight Frameworks

Member States must designate national competent authorities, including market surveillance authorities, to oversee compliance. These authorities will begin supervising the obligations that already apply, creating a real risk of investigation and penalties for non-compliance.

3. Governance Structures at EU Level

The rules governing the EU AI Office and the European Artificial Intelligence Board apply from this point, enabling coordinated enforcement across Member States.

4. Enforcement & Penalties

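The penalty regime becomes applicable. Fines can reach €35 million or 7% of worldwide annual turnover, whichever is higher, for placing prohibited AI systems on the market. Most other violations carry fines of up to €15 million or 3%. Fines for non-compliance with GPAI obligations, which the European Commission imposes directly on model providers, apply only from 2 August 2026.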

Obligations do not apply (yet) if your GPAI model was placed on the EU market before 2 August 2025

GPAI models placed on the EU market before 2 August 2025 are “grandfathered”: their providers have an extra two years, until 2 August 2027, to bring them into full compliance. This does not exempt you from other obligations (e.g., bans on prohibited uses). Making significant design changes would also trigger earlier compliance.

This buys time for providers of large, complex models to build the required governance frameworks. However, it is not a free pass! Regulators can still act against unacceptable uses or misleading practices.

The Bigger Picture: Other Key Dates

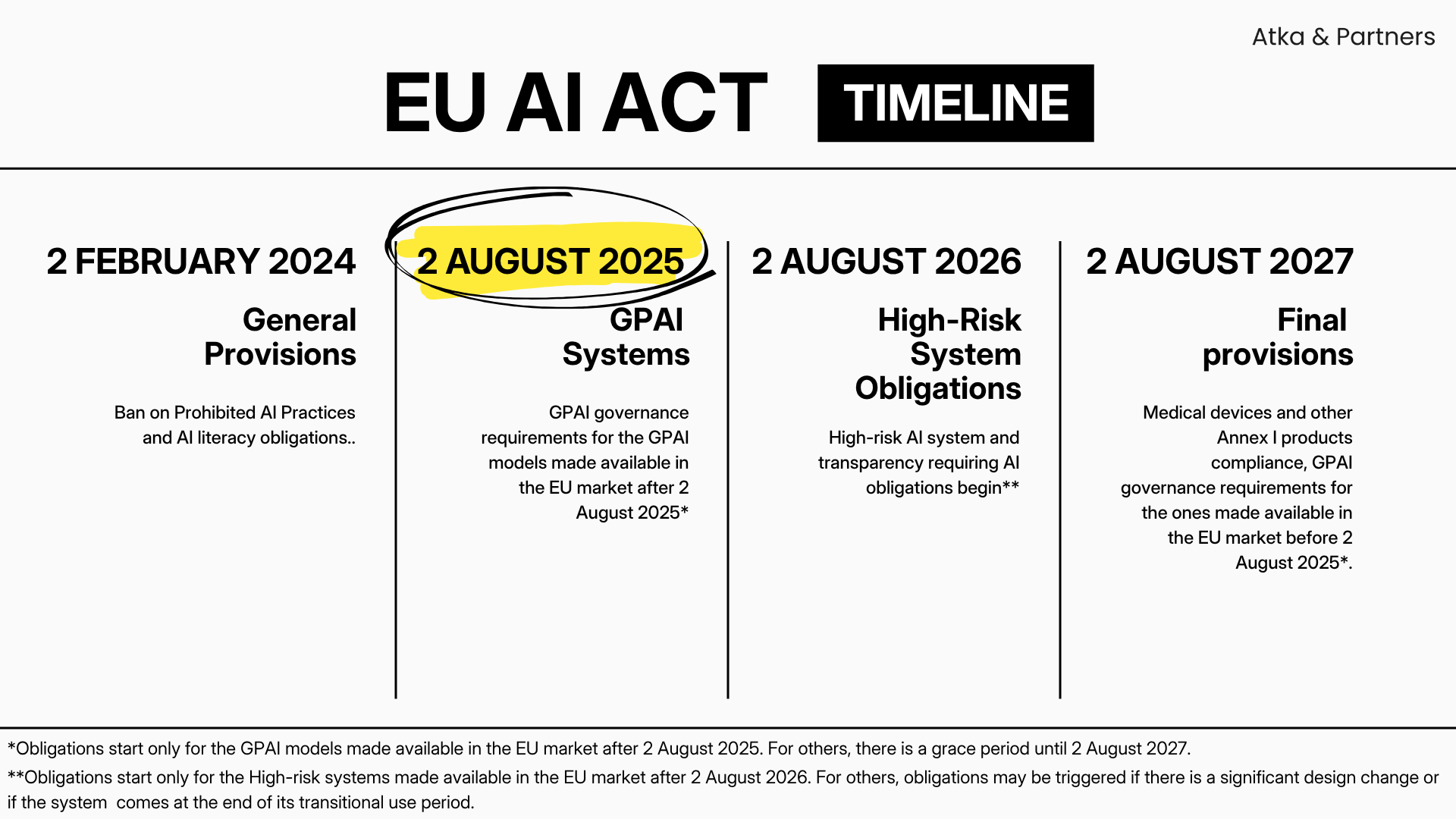

To understand the roadmap, here’s a simplified timeline of enforcement:

EU AI Act timeline of enforcement

High-risk AI systems placed on the EU market before 2 August 2026 do not have to meet the new high‑risk conformity assessment requirements under the AI Act until either:

A “significant design change” is made, or

The end of their transitional use period (generally tied to product lifecycle rules under sectoral law).

This exemption was made to avoid halting existing deployments (especially in regulated sectors like health or transport) and to give companies time to adapt their compliance procedures before full high‑risk obligations kick in on 2 August 2026.

A significant design change is any modification that affects the system’s compliance with the Act. Examples include adding new high-risk functionalities (e.g., expanding to new Annex III use cases) and major retraining or re-architecting that materially changes the system’s performance or risk profile. A change to the intended purpose also counts if the system would then be used in a way its original assessment and documentation did not cover. Minor software updates, bug fixes, or maintenance are unlikely to trigger these requirements, but any substantial redesign will.

In practice:

If your high‑risk AI system is already CE‑marked or approved under existing sectoral regulations (e.g., medical devices or product safety), you can keep using it without re‑certification under the AI Act, as long as you don’t significantly alter it.

But if you make a meaningful upgrade to the AI system (e.g., retraining on new datasets that change its functionality, or adding new high‑risk use cases), it will trigger the need for a full AI Act conformity assessment.

What Should Businesses Do Now?

Whether you’re a startup building foundation models, a scale-up using third-party AI tools, or an enterprise deploying AI at scale, this is your wake-up call:

Map Your AI Systems: Identify which of your models fall under GPAI or high-risk AI categories.

Document, Document, Document: Prepare technical documentation (datasets, intended purposes, limitations).

Engage with the EU Code of Practice: Even before obligations fully apply, adopting the voluntary General-Purpose AI Code of Practice is a smart defensive move, signalling good faith to regulators and stakeholders.

Plan for the Future (If Applicable): If your GPAI model is already on the EU market, use the two-year window wisely; don’t wait until 2027.

Train Your Teams: AI literacy is no longer optional. The AI Act’s AI literacy obligation has applied since 2 February 2025, so invest in staff training on ethical and legal AI deployment.

If you want a free assessment of your AI compliance strategy, feel free to reach out to us, and sign up for our newsletter below!